What a proxy is: practical tips and tricks for infrastructure teams.

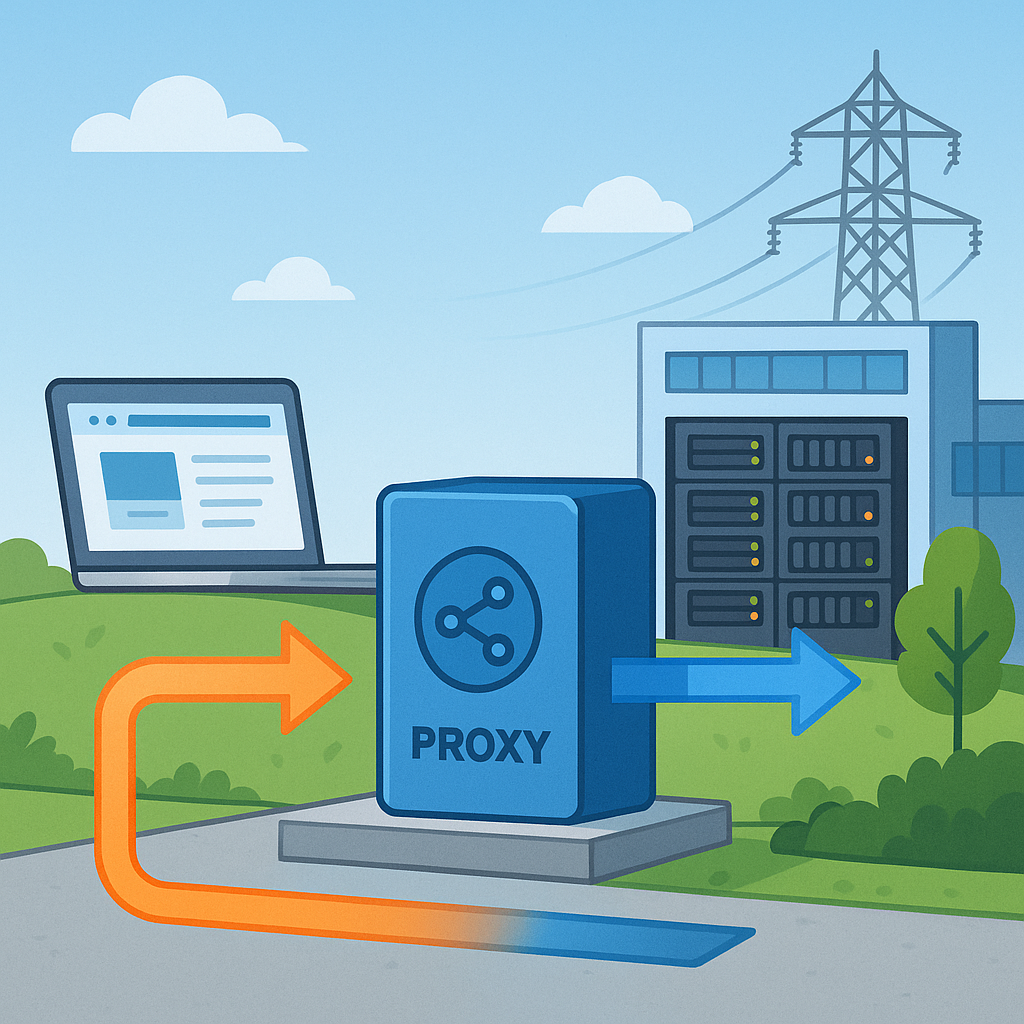

A proxy is an intermediary service that sits between a client and a destination server and relays requests and responses on behalf of the client. In infrastructure terms, a proxy can provide caching, access control, logging, protocol translation and traffic shaping, which helps organisations manage how services are consumed and how resources are protected. Thinking of a proxy as a controlled doorway is useful because it emphasises the role of policy enforcement, monitoring and optimisation rather than any notion of hiding behaviour or evading restrictions.

There are several flavours of proxy and each is suited to particular infrastructure needs, so it helps to understand the basic distinctions. A forward proxy handles outbound requests from clients to the wider internet and can be used for caching, enforcing browsing policies and consolidating outbound traffic through a central gateway. A reverse proxy fronts one or more backend servers, offering load balancing, TLS termination and application firewall capabilities. Transparent proxies intercept traffic at the network level, so clients need no explicit configuration, while SOCKS proxies relay arbitrary TCP connections (and, with SOCKS5, UDP traffic) without interpreting the application protocol, which makes them suitable for tunnelling legitimate non-HTTP services. Choosing the right type depends on the traffic patterns and security objectives of your environment.
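A reverse proxy's load-balancing step can be as simple as round-robin over healthy backends. The sketch below assumes health flags are maintained elsewhere (for example by a periodic health check, not shown); the `Backend` and `RoundRobinPool` names and the addresses are hypothetical.

```python
import itertools
import threading
from dataclasses import dataclass


@dataclass
class Backend:
    address: str          # e.g. "10.0.0.1:8080" (illustrative value)
    healthy: bool = True  # assumed to be updated by a separate health checker


class RoundRobinPool:
    """Round-robin backend selection that skips unhealthy backends."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._cycle = itertools.cycle(self._backends)
        self._lock = threading.Lock()  # requests may arrive concurrently

    def next_backend(self):
        with self._lock:
            # Try each backend at most once per call so that a fully
            # unhealthy pool fails fast instead of looping forever.
            for _ in range(len(self._backends)):
                candidate = next(self._cycle)
                if candidate.healthy:
                    return candidate
        raise RuntimeError("no healthy backends available")
```

Round-robin is the simplest policy; least-connections or weighted schemes follow the same shape with a different choice inside `next_backend`.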

When selecting or configuring a proxy, focus on a handful of practical criteria that impact reliability and observability. Prioritise protocol compatibility and support for current TLS versions (TLS 1.2 and 1.3), then consider performance characteristics such as connection pooling, keepalive behaviour and concurrency limits. Look for robust authentication and authorisation features, flexible logging and header manipulation, and a clear story for error handling and health checks. Finally, check whether the proxy supports caching with correct Cache-Control handling and stale-if-error directives to improve resilience during backend failures.
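The stale-if-error behaviour mentioned above comes down to a small decision per request. This sketch assumes only the Cache-Control `max-age`, `no-store` and `stale-if-error` directives (the last from RFC 5861), ignores quoted directive values and all other freshness rules, and leaves the actual backend fetch to the caller; the function names are hypothetical.

```python
def parse_cache_control(value):
    """Parse a Cache-Control header into a dict of lowercase directives.

    Valueless directives map to True and numeric values become ints.
    Quoted values are not handled in this simplified sketch.
    """
    directives = {}
    for part in value.split(","):
        name, _, arg = part.strip().partition("=")
        name, arg = name.strip().lower(), arg.strip()
        if not name:
            continue
        if not arg:
            directives[name] = True
        elif arg.isdigit():
            directives[name] = int(arg)
        else:
            directives[name] = arg
    return directives


def _seconds(value):
    # Only a real integer counts as a duration (bool is excluded on purpose).
    return value if type(value) is int else 0


def choose_response(cache_control, age, backend_ok):
    """Return "cache", "backend", "stale" or "error" for a cached entry.

    age is the cached entry's age in seconds; backend_ok says whether the
    attempt to fetch a fresh copy succeeded (only relevant once stale).
    """
    d = parse_cache_control(cache_control)
    if "no-store" in d:
        return "backend" if backend_ok else "error"
    max_age = _seconds(d.get("max-age"))
    grace = _seconds(d.get("stale-if-error"))
    if age < max_age:
        return "cache"          # still fresh: no backend round trip needed
    if backend_ok:
        return "backend"        # stale, but the backend answered
    if age - max_age <= grace:
        return "stale"          # backend failed within the grace window
    return "error"
```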

- Define the proxy's role clearly before deployment to avoid feature creep and complexity.

- Map expected traffic patterns and set connection, request and idle timeouts accordingly to prevent resource exhaustion.

- Handle provenance headers such as X-Forwarded-For and Forwarded consistently: overwrite client-supplied values at the edge and append only at trusted hops, so downstream services can rely on them.

- Enable structured logging and correlate logs with request IDs for easier troubleshooting and tracing.

- Test TLS termination and certificate rotation processes to ensure ongoing trust without manual intervention.
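The header and logging practices in the list above can be sketched as three small helpers. Assumptions: the caller already knows whether the immediate peer is a trusted proxy, and request IDs travel in a header such as X-Request-ID, which is a common convention rather than a standard. The function names are hypothetical.

```python
import json
import time
import uuid


def forwarded_for(incoming_xff, peer_ip, peer_is_trusted):
    """Return the X-Forwarded-For value to send upstream.

    A trusted proxy's chain is kept and the peer address appended; anything
    an untrusted client sent is discarded, since it could contain any value.
    """
    if peer_is_trusted and incoming_xff:
        return f"{incoming_xff}, {peer_ip}"
    return peer_ip


def request_id(incoming_id, peer_is_trusted):
    """Reuse a trusted hop's request ID so one request keeps one ID end to end."""
    if peer_is_trusted and incoming_id:
        return incoming_id
    return uuid.uuid4().hex


def log_line(req_id, method, path, status, duration_ms):
    """One JSON object per line is easy to ship, parse and join on request_id."""
    return json.dumps({
        "ts": round(time.time(), 3),
        "request_id": req_id,
        "method": method,
        "path": path,
        "status": status,
        "duration_ms": duration_ms,
    }, sort_keys=True)
```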

Deployment placement is a decisive factor in how effective a proxy will be, so choose locations that align with the proxy role and traffic flows. Place reverse proxies close to your edge to reduce latency for TLS termination and load balancing, and position forward proxies where they can consolidate and secure outbound traffic. In cloud environments you may combine edge-managed proxies with application-level proxies to balance security, observability and cost. Keep proxy configuration and its templates in version control so changes are auditable and reproducible.

Monitoring and maintenance deserve as much attention as initial configuration because proxies become critical control points in a mature infrastructure. Track request latency, error rates, backend health, cache hit ratios and connection utilisation as baseline metrics, and create alerts for sudden deviations from normal behaviour. Retain logs long enough to support forensic analysis while respecting privacy by minimising sensitive data in logs. Regularly exercise failover paths and perform capacity testing so that the proxy does not become a single point of failure during traffic spikes.
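One way to turn baseline metrics into alerts for sudden deviations is to compare each new sample with a rolling mean plus a multiple of the standard deviation. This rule is chosen for illustration only; the window size, `k` and `min_delta` values are assumptions, and many teams express the same idea in their monitoring system's alerting rules instead.

```python
import statistics
from collections import deque


def error_rate(errors, total):
    # Treat an idle interval as 0% errors rather than dividing by zero.
    return errors / total if total else 0.0


class DeviationAlert:
    """Flags a sample that sits far above its recent baseline.

    Threshold = mean + max(k * stdev, min_delta) over a sliding window;
    min_delta stops a perfectly flat baseline from alerting on tiny changes.
    """

    def __init__(self, window=30, k=3.0, min_samples=10, min_delta=0.0):
        self.samples = deque(maxlen=window)
        self.k = k
        self.min_samples = min_samples
        self.min_delta = min_delta

    def observe(self, value):
        """Record a sample; return True if it deviates from the baseline."""
        alert = False
        if len(self.samples) >= self.min_samples:
            mean = statistics.fmean(self.samples)
            stdev = statistics.pstdev(self.samples)
            alert = value > mean + max(self.k * stdev, self.min_delta)
        self.samples.append(value)
        return alert
```

Because alerting samples still join the window, a sustained shift gradually becomes the new baseline; whether that is desirable depends on the metric.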

Security and governance for proxies should emphasise least privilege, patching and clear access controls rather than complex obfuscation practices. Ensure the proxy process runs with restricted privileges, apply security updates promptly, and enforce authentication for administrative interfaces. Use TLS for upstream and downstream connections where appropriate, and consider additional controls such as IP allow-lists, rate-limiting, and request validation to reduce attack surface.
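IP allow-lists and rate-limiting can both be expressed in a few lines. This sketch uses Python's standard `ipaddress` module for CIDR matching and a token bucket for rate-limiting; the CIDR ranges, rates and helper names are illustrative assumptions rather than recommended values.

```python
import ipaddress
import time


def make_allow_list(cidrs):
    """Parse CIDR strings once, e.g. ["10.0.0.0/8", "2001:db8::/32"]."""
    return [ipaddress.ip_network(c) for c in cidrs]


def is_allowed(client_ip, networks):
    """True if client_ip falls inside any allowed network (IPv4 or IPv6)."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in networks)


class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity   # start full so an initial burst is allowed
        self.clock = clock       # injectable for testing
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

In a proxy you would typically keep one `TokenBucket` per client key (for example per source address) in a dictionary and answer rejected requests with HTTP 429 Too Many Requests.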
